Introduction: Why Are Americans Concerned About AI?

Artificial intelligence (AI) has long sparked both excitement and fear. In popular American culture, movies like The Terminator, Ex Machina, and I, Robot depict AI as something that could rebel and threaten humanity. Influential figures such as Stephen Hawking and Elon Musk have voiced concerns that uncontrolled AI might eventually surpass human intelligence and act in unpredictable ways.

But in our daily lives, AI hasn’t taken the form of killer robots. Instead, it’s powering things like Siri, Alexa, Google search, social media feeds, healthcare diagnostics, and even Netflix recommendations. These applications make life easier, but they also raise important ethical and practical questions.

So what is AI really, and is it something we should be worried about—or something we can embrace? Let’s explore.

What Exactly Is Artificial Intelligence?

At its core, artificial intelligence refers to machines or software that mimic aspects of human intelligence: performing tasks, learning from data, and making decisions with little or no human intervention.

Simple Definition:

AI is a branch of computer science focused on building systems that can perform tasks such as reasoning, problem-solving, and learning—abilities traditionally associated with humans.

There are several key types of AI:

- Machine Learning (ML): Algorithms that learn from data and improve over time.

- Natural Language Processing (NLP): Understanding and responding to human language.

- Computer Vision: Interpreting visual data like images and video.

- Generative AI: Creating new content like art, music, and text.

A Closer Look: How AI Works

AI doesn’t rely on magic or intuition. It learns from examples. Here’s a simple illustration:

Imagine you’re training an AI to identify birds. You feed it thousands of labeled images: eagles, pigeons, hummingbirds, and so on. The AI analyzes patterns like:

- Wing shape

- Beak size

- Color

- Leg structure

Each feature gets a weight. Over time and with feedback, the system learns to adjust those weights to improve accuracy.

Eventually, it can identify a new bird image it’s never seen before based on similarities. That’s AI in action—analyzing, learning, and making predictions.

This process underlies everything from facial recognition on your iPhone to spam filters in Gmail.
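
The weight-adjusting loop from the bird example can be sketched in a few lines of Python. This is a toy perceptron, one of the simplest learning algorithms, and not how production image classifiers are built (those typically learn their own features from raw pixels). The two features, their values, and the names `TRAINING_DATA`, `train`, and `predict` are all made up for illustration.

```python
# Toy perceptron: learns one weight per feature from labeled examples.
# Every number here is invented for illustration.

# Each example: ([wing_span, beak_size], label), scaled 0 to 1.
# Label 1 = eagle, label 0 = hummingbird.
TRAINING_DATA = [
    ([0.9, 0.8], 1),
    ([1.0, 0.7], 1),
    ([0.8, 0.9], 1),
    ([0.1, 0.2], 0),
    ([0.2, 0.1], 0),
    ([0.1, 0.3], 0),
]


def predict(weights, bias, features):
    """Return 1 (eagle) if the weighted sum of features is positive, else 0."""
    score = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if score > 0 else 0


def train(data, epochs=20, learning_rate=0.1):
    """Start with zero weights and nudge them after every wrong guess."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            # error is 0 for a correct guess, +1 or -1 for a wrong one
            error = label - predict(weights, bias, features)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(TRAINING_DATA)

# A bird the model has never seen: large wings, large beak.
new_bird = [0.85, 0.75]
label = "eagle" if predict(weights, bias, new_bird) == 1 else "hummingbird"
print("learned weights:", weights)
print("new bird looks like:", label)
```

The "feedback" in this sketch is the `error` term: a correct guess leaves the weights alone, while a wrong guess shifts them toward the right answer. Real systems use far more features and far more data, but the idea of adjusting weights to reduce mistakes is the same.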

AI in Everyday American Life

Most Americans interact with AI daily—often without even realizing it. Here are some examples:

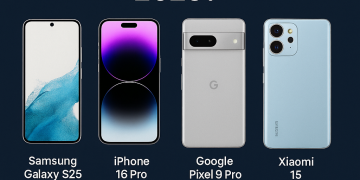

Smartphones

- Voice assistants like Siri or Google Assistant

- Predictive text in messages

- Facial recognition to unlock your device

Entertainment

- Netflix recommendations

- YouTube suggested videos

- Spotify’s Discover Weekly playlist

Social Media

- Facebook’s News Feed algorithm

- TikTok’s For You Page

- Instagram Reels suggestions

Finance

- Fraud detection at credit card companies

- Robo-advisors like Betterment or Wealthfront

- AI-driven stock trading platforms

Healthcare

- AI tools for early cancer detection

- Predictive analytics for hospital staffing

- Virtual health assistants

Travel and Transportation

- Google Maps predicting traffic

- Tesla’s Autopilot and Full Self-Driving (FSD)

- Airline scheduling systems

AI is also behind the scenes in agriculture, logistics, HR software, education platforms, and more.

The Black Box Problem: When AI Makes Mistakes

As AI becomes more complex, understanding how it reaches conclusions gets harder. This is known as the black box problem.

Real Example:

In a well-known research demonstration, an image classifier trained to tell wolves from huskies labeled a husky as a wolf. The cause was not the animal’s features: the wolf photos in its training data had snow in the background, so the model had effectively learned to detect snow.

This shows that AI can learn the wrong patterns—and without transparency, it’s hard to correct these errors.
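
A rough way to see how this kind of shortcut arises is to train a tiny model on data where the background happens to line up perfectly with the label. The sketch below is a toy perceptron with two invented features, not the image classifier from the husky example. All values and names (`TRAINING_DATA`, `husky_on_snow`, `wolf_on_grass`) are assumptions for illustration.

```python
# Toy demonstration of a model learning a shortcut. All numbers are invented.

# Each example: ([wolf_like_appearance, snow_in_background], label).
# Label 1 = wolf, label 0 = husky.
# Appearance scores overlap between the two animals, but every wolf photo
# has snow and no husky photo does, so snow is the easiest signal to learn.
TRAINING_DATA = [
    ([0.9, 1.0], 1),
    ([0.8, 1.0], 1),
    ([0.9, 1.0], 1),
    ([0.8, 0.0], 0),
    ([0.9, 0.0], 0),
    ([0.8, 0.0], 0),
]


def predict(weights, bias, features):
    """Return 1 (wolf) if the weighted sum of features is positive, else 0."""
    score = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if score > 0 else 0


def train(data, epochs=20, learning_rate=0.1):
    """Perceptron training: adjust weights only after a wrong guess."""
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            error = label - predict(weights, bias, features)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(TRAINING_DATA)

husky_on_snow = [0.8, 1.0]   # a husky photographed in winter
wolf_on_grass = [0.9, 0.0]   # a wolf photographed in summer

print("weights [appearance, snow]:", weights)
print("husky on snow ->", "wolf" if predict(weights, bias, husky_on_snow) else "husky")
print("wolf on grass ->", "wolf" if predict(weights, bias, wolf_on_grass) else "husky")
```

The model scores perfectly on its training data and still gets both new photos wrong, because the only pattern that separated the classes was the snow. Printing the weights is a crude form of transparency: here the snow weight clearly dominates, which is much harder to spot in a model with millions of weights.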

Can AI Be Biased?

Absolutely. AI is only as good as the data it’s trained on.

Real Example:

A major U.S. corporation developed an AI recruiting tool that began favoring male applicants. Why? Because it was trained on about 10 years of résumés submitted to the company, most of which came from men.

Biased training data leads to biased decisions. That’s dangerous when AI is used for hiring, credit approval, policing, or healthcare.

To reduce this risk, developers need to train on diverse, representative datasets and audit AI systems regularly.
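
One simple form an audit can take is comparing how often a system selects people from different groups. The sketch below computes each group’s selection rate and the ratio between the lowest and highest rate, then checks it against the "four-fifths" rule of thumb used in U.S. employment contexts (a ratio under 0.8 is commonly treated as a warning sign). The data in `DECISIONS` is hypothetical, the function names are made up for this example, and a real audit would look at far more than one number.

```python
from collections import defaultdict

# Hypothetical screening outcomes: (group, advanced_by_the_tool).
DECISIONS = [
    ("men", True), ("men", True), ("men", True), ("men", False), ("men", True),
    ("women", True), ("women", False), ("women", False), ("women", False), ("women", True),
]


def selection_rates(decisions):
    """Return the share of applicants advanced in each group."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, advanced in decisions:
        totals[group] += 1
        if advanced:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so rates are equal
    return min(rates.values()) / highest


rates = selection_rates(DECISIONS)
ratio = impact_ratio(rates)

print("selection rates:", rates)
print(f"impact ratio: {ratio:.2f}")
if ratio < 0.8:
    print("Below the four-fifths threshold: this system needs a closer look.")
```

In this made-up data, men advance at twice the rate of women, so the check fails. A failed check does not prove discrimination on its own, but it tells reviewers where to start asking questions.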

Generative AI: The Rise of AI Creators

Generative AI is booming. These systems can create original content based on training data.

Examples Americans are using today:

- ChatGPT: Writing emails, job applications, or essays.

- DALL·E & Midjourney: Generating art and product mockups.

- Synthesia: Creating AI-generated video presenters.

- Descript: Cloning voices or editing video via transcript.

The possibilities are incredible—but so are the risks, such as deepfakes, fake news, and copyright confusion.

AI vs. Human Jobs in America

Automation isn’t new. The U.S. saw similar concerns during the Industrial Revolution, the rise of computers, and the internet boom.

Jobs Most at Risk:

- Customer service reps

- Data entry clerks

- Retail cashiers

- Telemarketers

Emerging AI-Era Jobs:

- Prompt engineers

- AI ethics consultants

- Data annotators

- AI product managers

What You Can Do:

Upskill. Platforms like Coursera, edX, and LinkedIn Learning offer AI courses.

Leverage AI. Use it as a productivity booster, not a replacement.

Types of AI by Intelligence Level

Narrow AI (Most Common Today)

Performs a specific task or a bounded set of tasks well but can’t generalize beyond what it was built and trained for.

Example: Google Maps or ChatGPT.

General AI (Still Theoretical)

Can think and learn like a human across many domains.

Example: None yet. Still a work in progress.

Superintelligent AI (Hypothetical)

Smarter than the best human brains in all respects.

Example: Think Ultron or Skynet. Total fiction—so far.

Should Americans Be Worried?

Let’s be realistic. AI isn’t going to suddenly turn into a rogue robot army. But there are valid concerns:

Real Threats:

- Job displacement

- Algorithmic bias

- Lack of transparency

- Misuse for surveillance or propaganda

Fictional Fears:

- AI “deciding” to eliminate humanity

- Robots with emotions

- Conscious AI plotting world domination

For now, these are science fiction—not science fact.

Government & Ethical Regulation in the U.S.

The U.S. is beginning to regulate AI more seriously:

- White House Blueprint for an AI Bill of Rights (2022)

- Federal Trade Commission (FTC) investigating AI fairness

- President Biden’s executive order on safe, secure, and trustworthy AI (October 2023)

There’s still a long way to go. Most experts agree that transparency, explainability, and accountability are key.

FAQs for the American Reader

Is AI safe?

AI itself is neutral. Safety depends on how it’s built and who uses it.

Can AI think?

No. It mimics reasoning but has no consciousness.

How do I prepare for an AI-driven job market?

Learn digital skills, understand AI tools, and stay adaptable.

Is AI in schools a good idea?

It depends. AI can personalize learning, but it needs oversight to prevent bias or misuse.

Final Thoughts: AI Is a Tool—Not a Threat

AI is not good or evil. It’s powerful. And like any powerful tool—nuclear energy, the internet, or social media—how we use it defines its impact.

What Should You Do?

- Stay informed.

- Question AI-generated content.

- Vote for leaders who prioritize ethical AI.

- Use AI to improve—not replace—your human strengths.
